InPhyRe Discovers: Large Multimodal Models Struggle with Inductive Physical Reasoning

Can large multimodal models (LMMs) infer the underlying physical laws from demonstration samples and then apply them to answer physical queries about an evaluation sample? We design a benchmark to evaluate this "inductive physical reasoning." We find that LMMs struggle with inductive physical reasoning, and that whatever inductive physical reasoning they do demonstrate suffers from significant language bias.

For details, please refer to our preprint on arXiv.

Inductive physical reasoning is the ability to infer the physics that govern the observed events from demonstration samples, and apply these inferred laws to answer physical reasoning queries about a new sample. Inductive physical reasoning is distinct from parametric knowledge of LMMs, which is useful only as long as the physical scenarios during training and inference match.

An LMM that shows inductive physical reasoning is more reliable, as it demonstrates its adaptability in physical reasoning.
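The evaluation setup described above can be sketched as a few-shot prompt: several demonstration samples followed by one unanswered query. This is a minimal illustration only. The `Sample` schema, the `build_prompt` and `accuracy` helpers, the message-part dictionaries, and the `include_qa` switch are all hypothetical names introduced here, not the benchmark's actual interface.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Sample:
    """One rendered scene plus a physical-reasoning question (hypothetical schema)."""
    video_path: str
    question: str
    answer: str


def build_prompt(demos: Sequence[Sample], query: Sample,
                 include_qa: bool = True) -> List[dict]:
    """List the demonstration samples first, then append the unanswered query."""
    parts: List[dict] = []
    for demo in demos:
        parts.append({"type": "video", "path": demo.video_path})
        if include_qa:
            # Textual supervision; dropping it leaves only the visual evidence.
            parts.append({"type": "text",
                          "text": f"Q: {demo.question}\nA: {demo.answer}"})
    parts.append({"type": "video", "path": query.video_path})
    parts.append({"type": "text", "text": f"Q: {query.question}\nA:"})
    return parts


def accuracy(model: Callable[[List[dict]], str], demos: Sequence[Sample],
             queries: Sequence[Sample], include_qa: bool = True) -> float:
    """Fraction of queries answered correctly (case-insensitive exact match)."""
    correct = 0
    for q in queries:
        prediction = model(build_prompt(demos, q, include_qa))
        correct += prediction.strip().lower() == q.answer.strip().lower()
    return correct / len(queries)
```

Here `model` stands for any callable that maps the prompt parts to an answer string. Comparing `accuracy(..., include_qa=True)` with `accuracy(..., include_qa=False)` is one way to probe how much a model leans on the text of the demonstrations rather than on what it sees.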

The primary challenge in evaluating inductive physical reasoning is to ensure that our benchmark is not contaminated by any training sample. In particular, we need to ensure that the physical law that must be inferred from the demonstration samples is absent from the parametric knowledge of LMMs.

Since we do not have access to the training sets of LMMs, we design physical scenarios that violate universal physical laws. These scenarios therefore demand that LMMs infer the governing physics on the fly instead of recalling physics from their parametric knowledge.

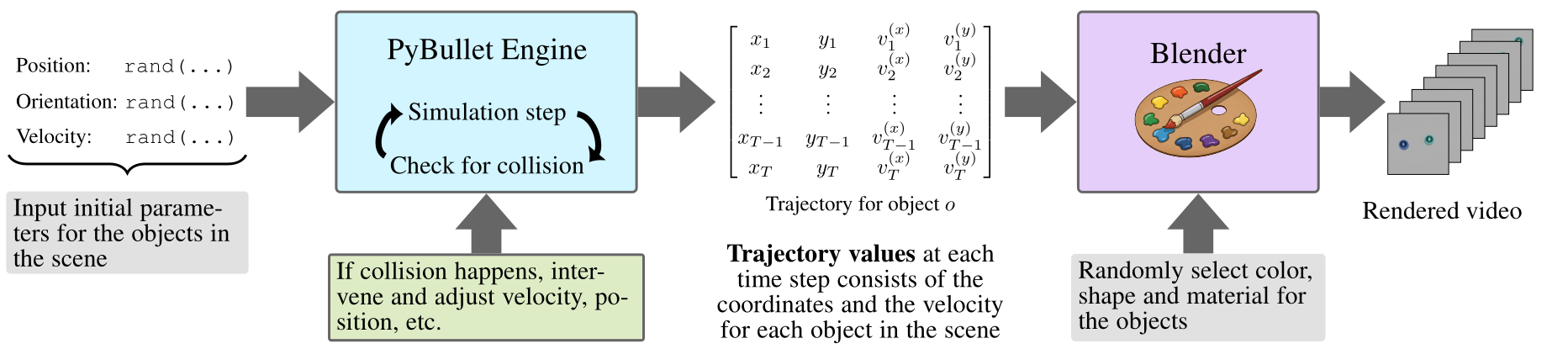

InPhyRe is designed to carefully evaluate the on-the-fly physical reasoning in LMMs, including physical biases in LMMs. We first generate object trajectories that follow custom physics, and then render these objects using Blender.

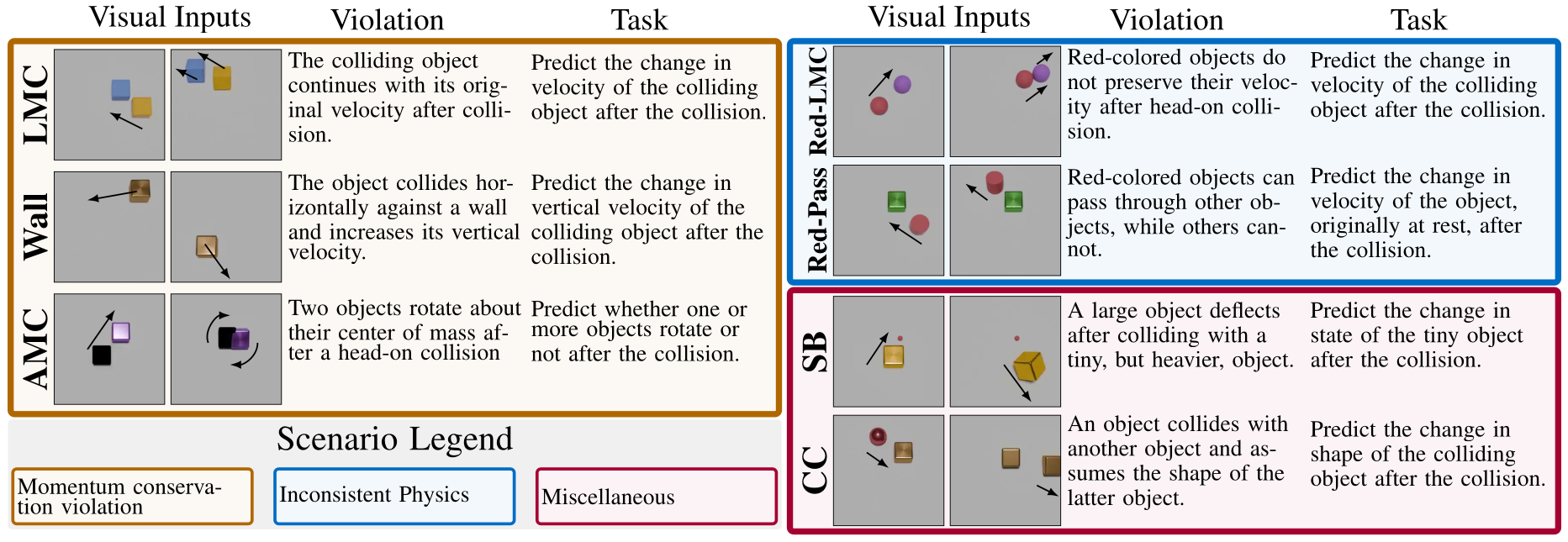
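As a rough illustration of generating a trajectory under custom physics, the sketch below simulates a single object that may hit a wall and lets the collision rule be swapped. Everything here is an assumption made for illustration: the function names, the time step, the `boost` value, and the choice to flip the horizontal velocity in the irregular rule are not taken from the benchmark. The resulting per-frame positions are the kind of data a renderer could consume.

```python
from typing import Callable, List, Tuple

Vec = Tuple[float, float]


def regular_wall(v: Vec) -> Vec:
    """Elastic bounce off a vertical wall: the horizontal component flips."""
    return (-v[0], v[1])


def irregular_wall(v: Vec, boost: float = 1.0) -> Vec:
    """Hypothetical custom rule: bounce, and also gain vertical velocity."""
    return (-v[0], v[1] + boost)


def simulate(pos: Vec, vel: Vec, rule: Callable[[Vec], Vec],
             wall_x: float = 0.0, dt: float = 0.1, steps: int = 30) -> List[Vec]:
    """Return per-frame (x, y) positions for an object that may hit a wall at wall_x."""
    x, y = pos
    vx, vy = vel
    frames = [(x, y)]
    for _ in range(steps):
        x, y = x + vx * dt, y + vy * dt
        if x <= wall_x and vx < 0:
            # Mirror the overshoot back into the scene, then apply the rule.
            x = wall_x + (wall_x - x)
            vx, vy = rule((vx, vy))
        frames.append((x, y))
    return frames


regular = simulate((1.0, 0.0), (-1.0, 0.0), regular_wall)
irregular = simulate((1.0, 0.0), (-1.0, 0.0), irregular_wall)
```

Both runs share the same horizontal motion; only the irregular run drifts upward after the bounce, which is the cue a model would have to pick up from the demonstrations.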

InPhyRe contains ten scenarios: seven impossible physical scenarios (called irregular scenarios) and three universal physics scenarios (called regular scenarios). The categorization of these scenarios is shown below.

A moving object collides with an object of equal mass at rest. Instead of losing velocity, however, the moving object continues with the same velocity.
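A short check, assuming a head-on collision in one dimension, shows why this outcome is impossible under universal physics. The symbols m (mass of each object), v (incoming velocity), and v_1', v_2' (post-collision velocities of the moving and struck objects) are introduced here for illustration only.

```latex
\begin{align}
  m v &= m v_1' + m v_2' && \text{(linear momentum conservation, equal masses)} \\
  v_1' = v \;&\Rightarrow\; v_2' = v - v_1' = 0 && \text{(the struck object would have to stay at rest)}
\end{align}
```

If the moving object keeps its velocity, momentum conservation leaves nothing for the struck object, so the two would have to pass through each other; if the struck object moves forward at all, the total momentum grows. For comparison, a perfectly elastic collision between equal masses gives v_1' = 0 and v_2' = v.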

Two objects of equal mass collide head-on and then rotate around their center of mass instead of moving in different directions, thus violating angular momentum conservation.

Here, an object collides with a wall on the left side and gains velocity along the vertical direction.

This scenario tests whether models over-rely on object size cues when predicting motion that should depend on mass. Here, a large, low-mass object is deflected after colliding with a small but heavy object.
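The dependence on mass rather than size can be illustrated with the standard formulas for a perfectly elastic collision in one dimension. This is a sketch under assumptions: the text does not state that the collision is elastic or one-dimensional, and the masses and velocities below are made-up example values.

```python
from typing import Tuple


def elastic_collision_1d(m1: float, v1: float,
                         m2: float, v2: float) -> Tuple[float, float]:
    """Post-collision velocities for a 1D perfectly elastic collision."""
    total = m1 + m2
    v1_after = ((m1 - m2) * v1 + 2.0 * m2 * v2) / total
    v2_after = ((m2 - m1) * v2 + 2.0 * m1 * v1) / total
    return v1_after, v2_after


# A large but light object (mass 1) hits a small but heavy object (mass 9) at rest.
# Object size never enters the formulas; only the masses and velocities do.
light_after, heavy_after = elastic_collision_1d(m1=1.0, v1=2.0, m2=9.0, v2=0.0)
print(light_after, heavy_after)
```

The light object ends up with a negative velocity, meaning it rebounds, while the heavy object moves forward slowly. A model that judges by apparent size would expect the opposite.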

A moving object collides with an object at rest and assumes the appearance (color and shape) of the latter. This scenario violates both mechanical laws and object realism.

Red objects violate the principle of linear momentum conservation after a collision, while other objects satisfy it. This scenario requires conditional inductive physical reasoning.

Red objects can pass through other objects after a collision, while the remaining objects follow universal physics. This scenario violates both mechanics and realism and evaluates conditional inductive physical reasoning, making it one of the most challenging scenarios in InPhyRe.

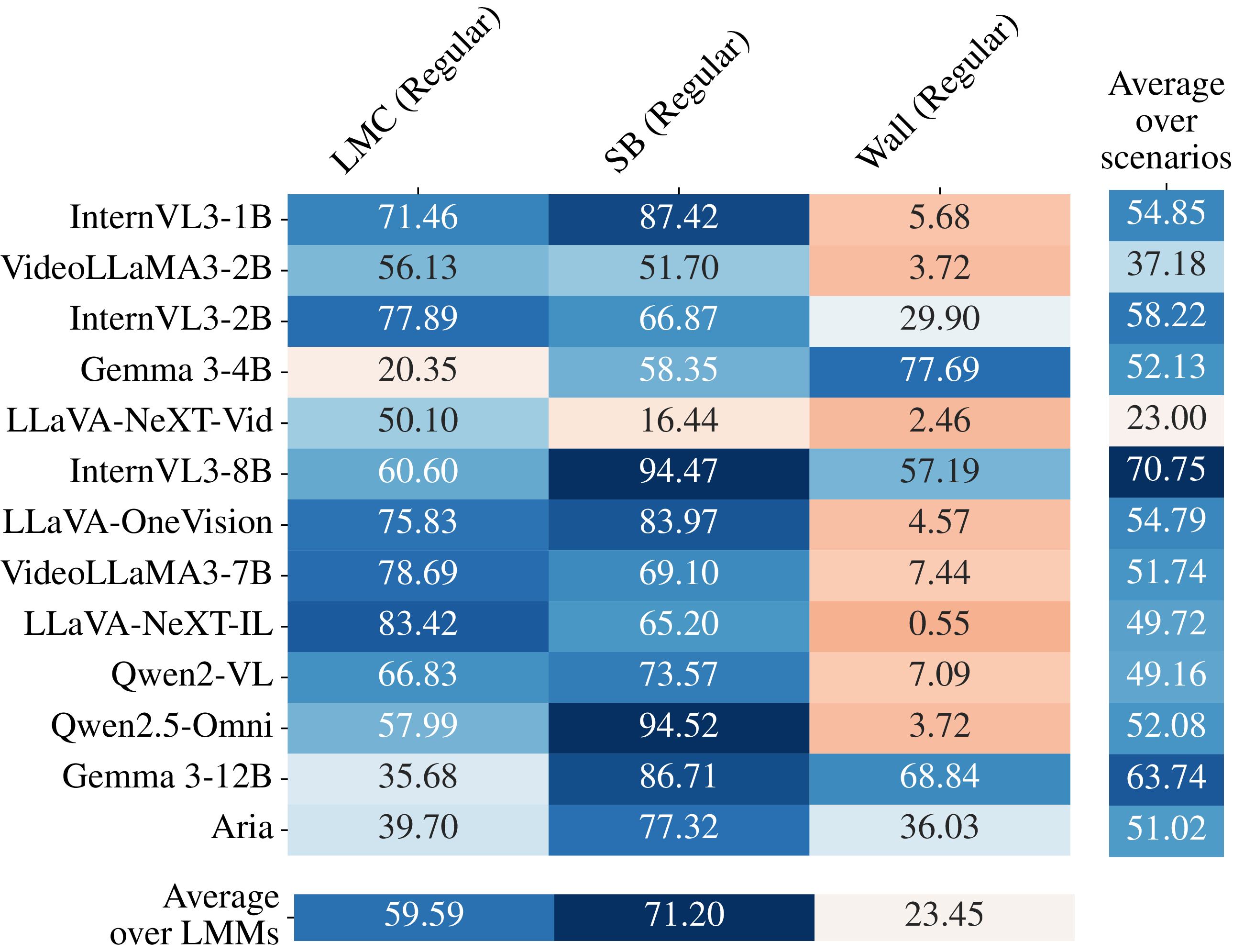

Finding #1: LMMs have limited parametric knowledge about the laws of mechanics. Although LMMs can state universal laws, they often struggle to apply them for physical reasoning.

Finding #2: Exemplars that obey universal physical laws successfully support the parametric knowledge in LMMs. This is evident from the performance improvement in the plot above. With only three exemplars, several LMMs achieve nearly 100% prediction accuracy.

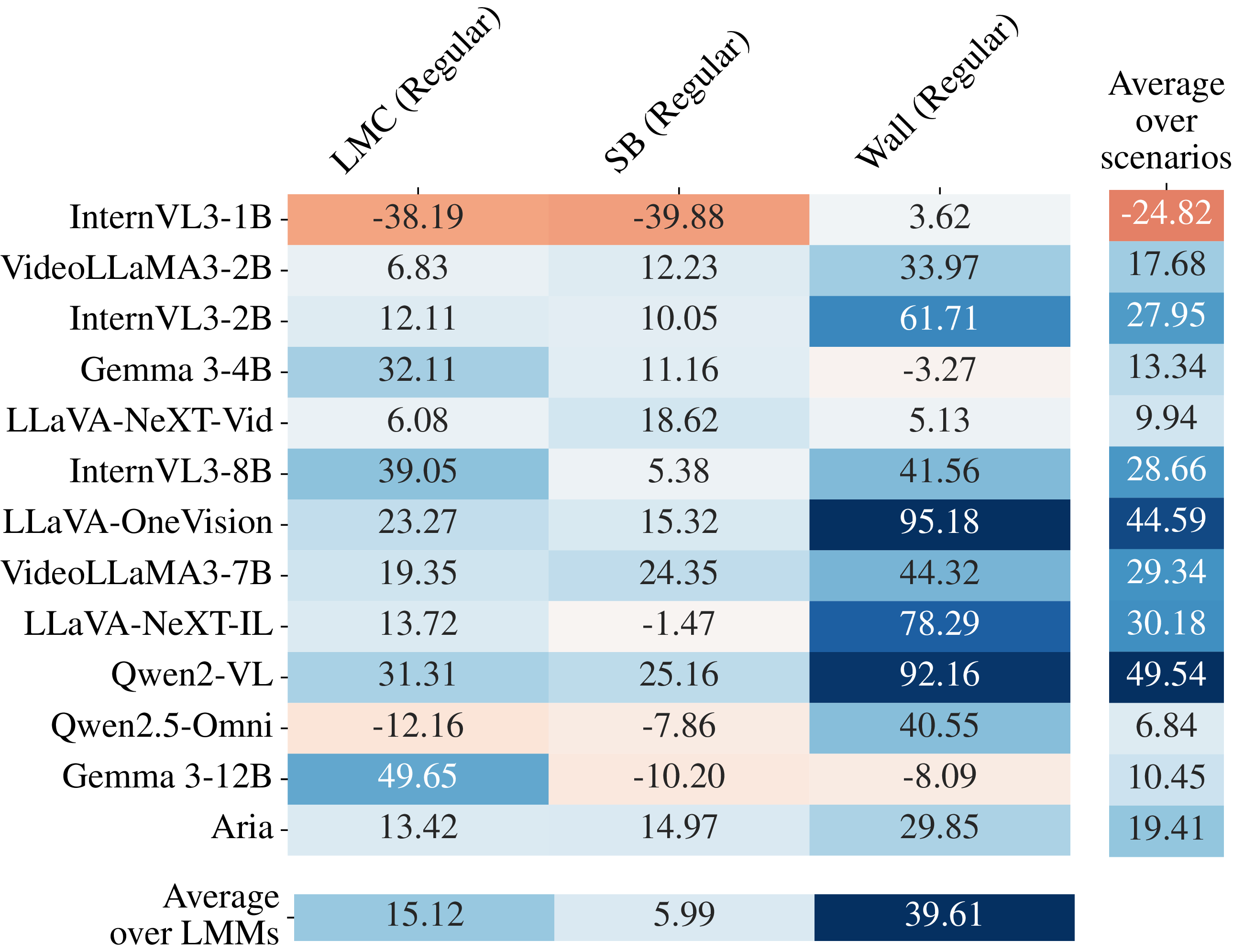

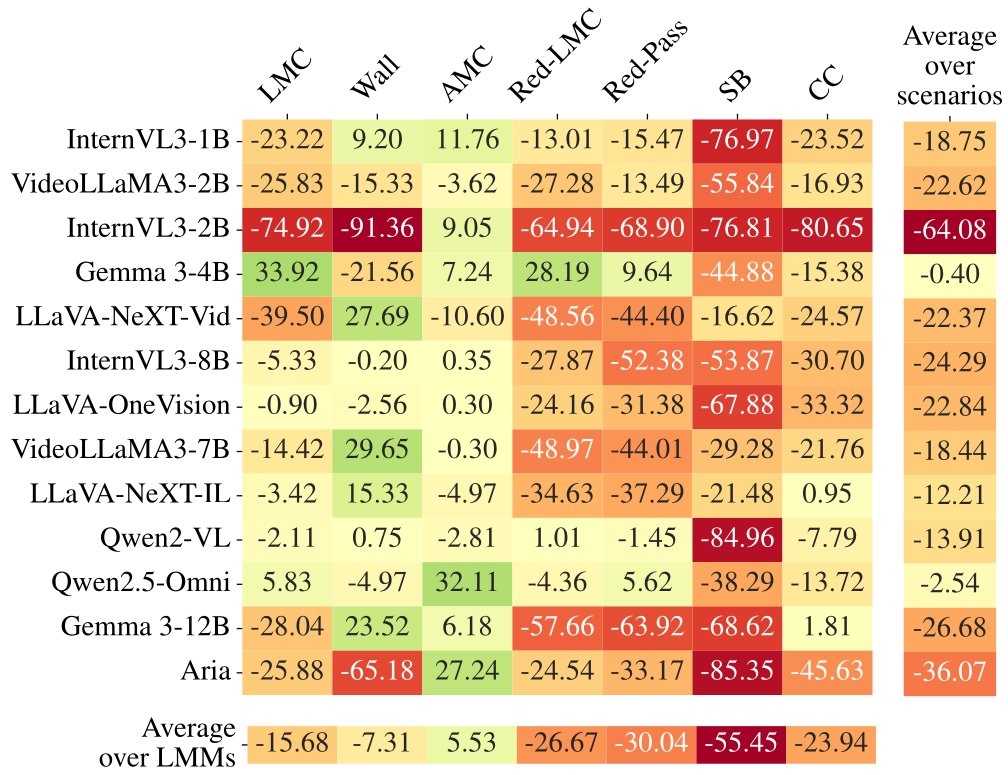

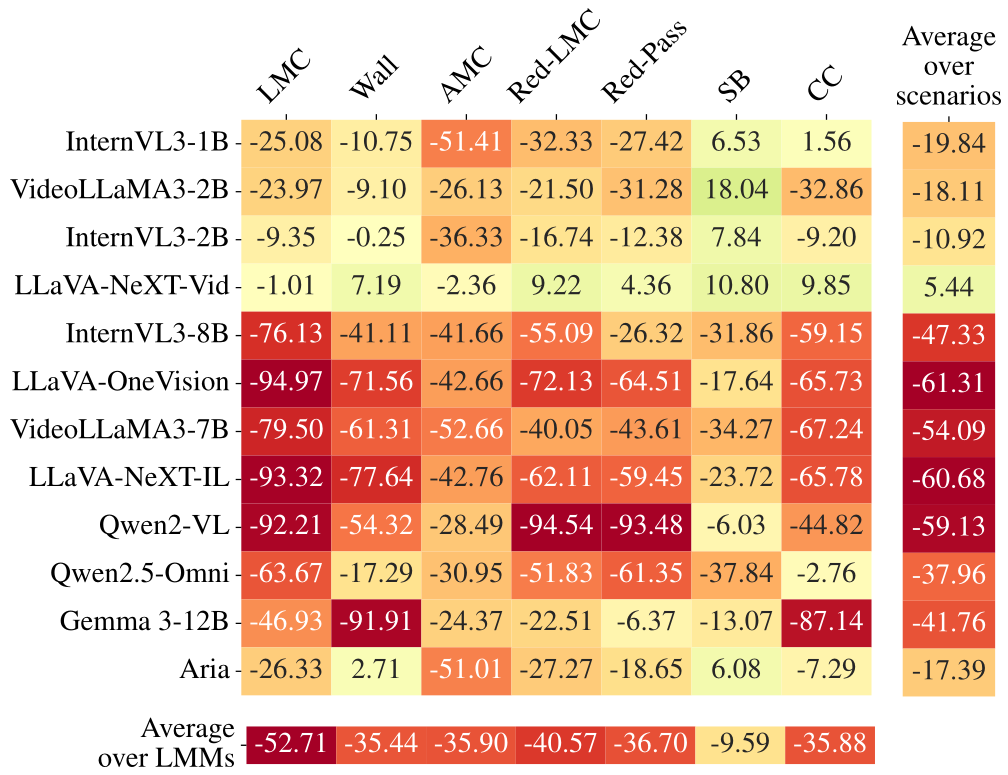

Finding #3: LMMs demonstrate only weak inductive physical reasoning when exemplars violate parametric knowledge. Almost all LMMs showed significant deterioration in performance in the above plot, compared to few-shot regular scenario performance.

Finding #4: Inductive physical reasoning in the evaluated LMMs shows strong language bias: the models rely primarily on the textual content of the exemplars to answer the question. Performance dropped relative to the few-shot irregular setting in which question-answer pairs were provided in the demonstration samples.

We found that explicitly providing the underlying physical laws as chain-of-thought prompting improved inductive physical reasoning. However, this is not a practical solution. A more resilient approach to improve inductive physical reasoning is to explicitly embed physics-decoding components in LMMs.

@article{inphyre,
  title={{InPhyRe Discovers: Large Multimodal Models Struggle with Inductive Physical Reasoning}},
  author={Gautam Sreekumar and Vishnu Naresh Boddeti},
  year={2025},
  journal={arXiv preprint arXiv:2509.12263},
  url={https://arxiv.org/abs/2509.12263}
}